If you’re running an online store with WooCommerce, you may have noticed that add-to-cart buttons generate dynamic URLs. Search engine crawlers and other bots can follow these URLs, which can lead to indexing and SEO issues. In this article, I will share some tips on how to prevent WooCommerce add-to-cart dynamic URLs from being crawled and indexed.

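One way to prevent bots from crawling add-to-cart URLs is to disallow them in your robots.txt file. This tells compliant crawlers such as Googlebot and Bingbot not to crawl these URLs. Strictly speaking, robots.txt controls crawling rather than indexing: Google can still index a blocked URL, without its content, if other pages link to it.

Let’s see how.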


Understanding Dynamic URLs in WordPress

As an eCommerce platform, WooCommerce can use dynamic URLs to serve product pages, filters, and cart actions. Dynamic URLs contain variables and parameters that generate content based on user input or database information. WooCommerce’s add-to-cart buttons, for example, link to URLs with an “add-to-cart” parameter, such as “/shop/?add-to-cart=123”, so customers can add a product to their cart directly from a shop or category page.

What are Dynamic URLs?

Dynamic URLs are URLs that contain variables and parameters, usually in the query string after the “?”, that generate content based on user input or database information. In a store, they can be used to serve a unique page for each item in the inventory.

For example, a dynamic URL might include a product ID, a category, or a price range.

When a customer follows such a link, the server reads the variables and parameters in the URL and generates the matching content.
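As a concrete illustration, the minimal Python sketch below uses the standard library’s `urllib.parse` to split a URL of the kind an add-to-cart button produces into its static path and its dynamic parameters. The helper name `describe_url`, the domain `example.com`, the `/shop/` path, and product ID `123` are placeholders chosen for illustration.

```python
from urllib.parse import urlsplit, parse_qs


def describe_url(url):
    """Split a URL into its static path and its dynamic query parameters."""
    parts = urlsplit(url)
    # parse_qs maps each parameter name to a list of values; keep the first value.
    params = {name: values[0] for name, values in parse_qs(parts.query).items()}
    return {"path": parts.path, "params": params}


# Placeholder store URL: example.com and product ID 123 are hypothetical.
print(describe_url("https://example.com/shop/?add-to-cart=123"))
# {'path': '/shop/', 'params': {'add-to-cart': '123'}}
```

The path is the part a static site would also have; everything after “?” is what makes the URL dynamic.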

Why are Dynamic URLs Used in WooCommerce?

Dynamic URLs let WooCommerce generate pages for each item in a store’s inventory, and handle cart actions, without a separate static page for every combination. Add-to-cart links, for instance, let customers add products to their cart straight from a shop or category page rather than navigating to each product page first. Additionally, URL parameters allow store owners to track customer behavior and generate analytics data that can be used to improve the shopping experience and increase sales.

While dynamic URLs can have a negative impact on SEO, there are ways to mitigate these issues. For example, store owners can use canonical tags to indicate the preferred URL for a piece of content, or they can use WordPress permalinks to replace query parameters with cleaner, more readable URLs. Additionally, store owners can use a robots.txt file to prevent search engines from crawling certain pages or directories.
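To illustrate the canonical-tag idea, here is a minimal Python sketch that removes cart-action parameters from a URL and builds the matching `<link rel="canonical">` element. It assumes that the four parameters in the hypothetical `CART_PARAMS` set (the ones this article later blocks in robots.txt) never change a page’s content; `canonical_url` and `canonical_tag` are hypothetical helpers. In practice, SEO plugins such as Yoast SEO usually output canonical tags for you, so this only shows the principle.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumption: these WooCommerce cart-action parameters never change page content.
CART_PARAMS = {"add-to-cart", "remove_item", "removed_item", "undo_item"}


def canonical_url(url):
    """Return the URL with cart-action parameters (and any fragment) removed."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in CART_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_tag(url):
    """Build the <link rel="canonical"> element for the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'


print(canonical_tag("https://example.com/shop/?add-to-cart=123"))
# <link rel="canonical" href="https://example.com/shop/">
```

Parameters that do change the content, such as a sort order, are kept, so “/shop/?orderby=price&add-to-cart=5” maps to “/shop/?orderby=price”.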

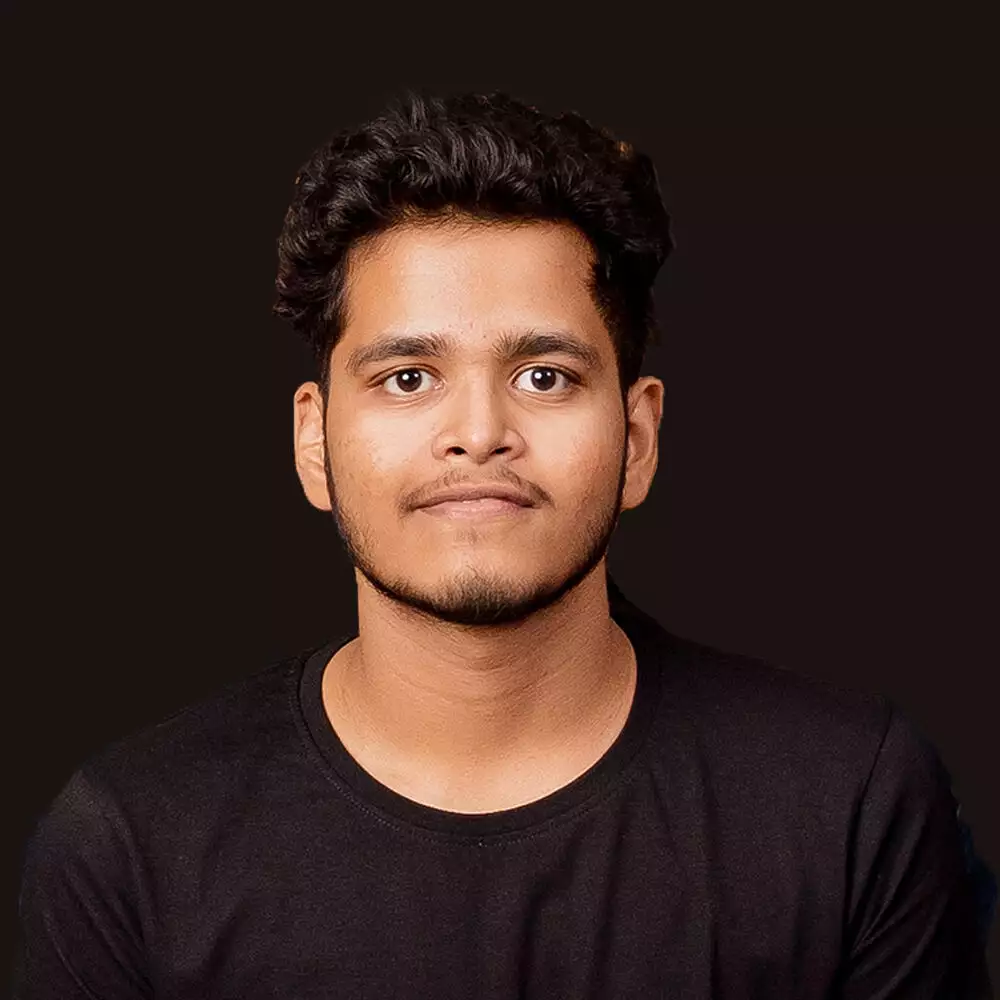

How to Identify Whether Google Has Crawled Your Website’s Dynamic URLs

To check whether Google has crawled your website’s dynamic URLs using Google Search Console:

- Log in to your Google Search Console account and open the “Pages” report in the “Indexing” section.

- The report splits the URLs Google knows about into indexed and not indexed, and lists example URLs for each reason (for example, “Crawled - currently not indexed” or “Blocked by robots.txt”). Look for your dynamic URLs, such as those containing “add-to-cart=”, among these examples.

- To check a specific URL, enter it in the URL Inspection tool. It shows whether the URL is indexed, when Google last crawled it, and any issues Google encountered while crawling it.

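You can also use technical SEO crawlers such as Screaming Frog or WebSite Auditor to find which dynamic URLs are linked from your pages and are therefore discoverable by search engines.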
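Server access logs offer another angle, separate from the Search Console steps above: they record every request a crawler actually made. The minimal Python sketch below assumes logs in the common Apache/Nginx “combined” format and the four WooCommerce cart parameters used in this article; `googlebot_cart_hits` is a hypothetical helper and the sample log lines are made up. Because anyone can send a Googlebot user-agent string, confirm real Googlebot traffic (for example, with a reverse DNS lookup) before drawing conclusions.

```python
import re

# Cart-action parameters this article blocks in robots.txt.
CART_PARAM_RE = re.compile(r"[?&](add-to-cart|remove_item|removed_item|undo_item)=")
# Request path and user agent in the "combined" log format (assumed format).
LINE_RE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_cart_hits(lines):
    """Yield cart-action request paths whose user agent *claims* to be Googlebot."""
    for line in lines:
        match = LINE_RE.search(line)
        if match and "Googlebot" in match.group("agent") and CART_PARAM_RE.search(match.group("path")):
            yield match.group("path")


# Made-up sample lines for illustration.
sample = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /shop/?add-to-cart=123 HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2023:13:56:01 +0000] "GET /shop/?add-to-cart=123 HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
    '66.249.66.1 - - [10/Oct/2023:13:57:12 +0000] "GET /product/blue-mug/ HTTP/1.1" 200 8300 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]
print(list(googlebot_cart_hits(sample)))  # ['/shop/?add-to-cart=123']
```

To scan a real log, pass an open log file instead of `sample`; where that file lives depends on your hosting setup.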

Why Prevent Dynamic URLs from Crawling?

As a WooCommerce website owner, it is essential to understand how dynamic URLs affect your website’s SEO. Because these URLs change with user input or session information, a store can expose a very large number of them, and letting search engines crawl them can hurt your SEO and lead to duplicate content issues.

What Happens When Dynamic URLs are Crawled?

Crawling dynamic URLs can cause several issues for your WooCommerce website. Here are a few examples:

- Search engines may index multiple versions of the same page, leading to duplicate content issues.

- While crawlers are busy with dynamic URLs, they may visit some of your important pages less often or overlook them altogether.

- Crawling dynamic URLs can also lead to crawl budget wastage, where search engines spend time crawling unnecessary pages instead of important pages.
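To see both problems in numbers, the minimal Python sketch below groups a list of crawled URLs by the page they actually serve (the URL with cart parameters removed) and reports what share of requests went to cart-action URLs. The URL list is invented for illustration, `crawl_report` is a hypothetical helper, and it assumes the four cart parameters used in this article don’t change page content.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumed set: the WooCommerce cart-action parameters discussed in this article.
CART_PARAMS = {"add-to-cart", "remove_item", "removed_item", "undo_item"}


def has_cart_param(url):
    """True if the query string carries any cart-action parameter."""
    query = urlsplit(url).query
    return any(key in CART_PARAMS for key, _ in parse_qsl(query, keep_blank_values=True))


def strip_cart_params(url):
    """Return the URL without cart-action parameters, i.e. the page that is served."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in CART_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def crawl_report(crawled_urls):
    """Return (pages reached through several URLs, share of requests spent on cart URLs)."""
    groups = defaultdict(list)
    for url in crawled_urls:
        groups[strip_cart_params(url)].append(url)
    duplicates = {page: urls for page, urls in groups.items() if len(urls) > 1}
    wasted = sum(1 for url in crawled_urls if has_cart_param(url))
    return duplicates, wasted / len(crawled_urls)


# Invented crawl log for illustration.
crawled = [
    "https://example.com/shop/",
    "https://example.com/shop/?add-to-cart=12",
    "https://example.com/shop/?add-to-cart=15",
    "https://example.com/product/blue-mug/",
]
duplicates, share = crawl_report(crawled)
print(len(duplicates["https://example.com/shop/"]), share)  # 3 0.5
```

Here one shop page is reachable through three URLs, and half of the crawl requests went to URLs that add nothing new.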

How Does Crawling Dynamic URLs Affect SEO?

Crawling dynamic URLs can significantly impact your website’s SEO. Here are a few ways:

- Dynamic URLs can make it challenging for search engines to understand the structure of your website and the hierarchy of your pages.

- Dynamic URLs can lead to duplicate content issues, which can hurt your website’s ranking and visibility in search engine results pages.

- Crawling dynamic URLs also wastes crawl budget: search engines spend time on unnecessary pages instead of important ones, which can delay the discovery and indexing of new or updated pages and reduce their visibility.

Therefore, it is crucial to prevent search engines from crawling dynamic URLs to ensure that your website’s SEO is not negatively impacted.

Preventing Dynamic URLs from Being Crawled

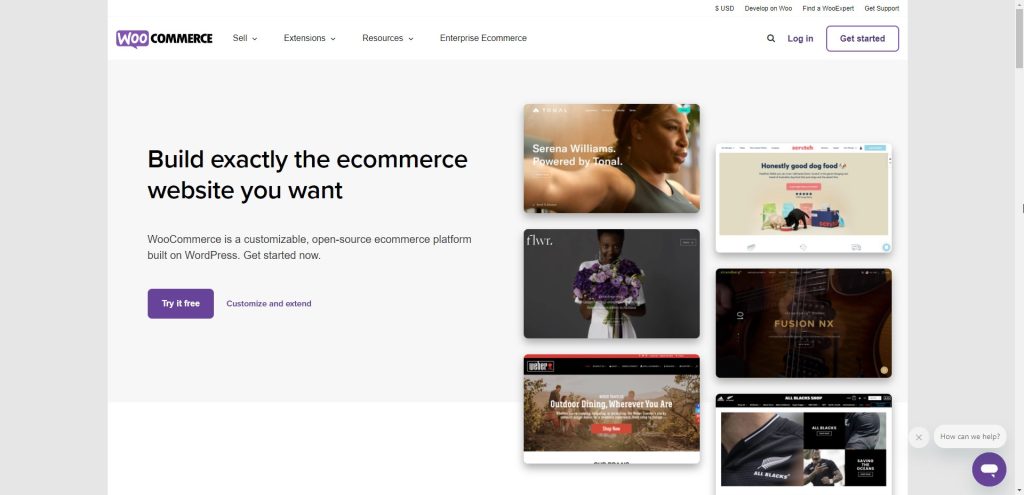

To stop search engine crawlers from requesting the dynamic add-to-cart and other WooCommerce cart links, we will use the robots.txt file.

Using the Robots.txt File

One of the simplest ways to prevent search engines from crawling dynamic URLs is the robots.txt file. This plain-text file sits at the root of your domain and follows the Robots Exclusion Protocol, a standard that tells compliant crawlers which URLs they may crawl and which to avoid.

To prevent dynamic URLs from being crawled, use the “Disallow” directive in your robots.txt file. For example, to keep search engines away from WooCommerce’s add-to-cart and other cart-action URLs, add the following lines to your robots.txt file:

```
# Preventing WooCommerce dynamic URLs
User-agent: *
Disallow: /*?add-to-cart=*
Disallow: /*?remove_item=*
Disallow: /*?removed_item=*
Disallow: /*?undo_item=*
```

This tells compliant crawlers to skip any URL that contains “?add-to-cart=”, “?remove_item=”, “?removed_item=”, or “?undo_item=”. Because each pattern includes the “?”, a rule matches only when that parameter is the first one in the query string.
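Before deploying rules like these, it helps to check which URLs they actually match. Python’s standard `urllib.robotparser` does not implement the `*` wildcard inside paths, so the minimal sketch below uses a small hand-written matcher that follows the wildcard rules Google documents for robots.txt (`*` matches any sequence of characters, a trailing `$` anchors the end of the URL). It is simplified: it ignores Allow rules and rule precedence. `rule_to_regex` and `is_disallowed` are hypothetical helpers, and the patterns are written with a leading “/”.

```python
import re

# The WooCommerce Disallow patterns discussed in this article, with a leading "/".
DISALLOW = ["/*?add-to-cart=*", "/*?remove_item=*", "/*?removed_item=*", "/*?undo_item=*"]


def rule_to_regex(pattern):
    """Translate a robots.txt path pattern into a regex matched from the start of the URL path.

    '*' matches any run of characters and a trailing '$' anchors the end;
    every other character, including '?', is matched literally.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(path_and_query, rules=DISALLOW):
    """True if any Disallow pattern matches; Allow rules and precedence are not modelled."""
    return any(rule_to_regex(rule).match(path_and_query) for rule in rules)


for url in ["/shop/?add-to-cart=123", "/cart/?remove_item=abc&_wpnonce=1",
            "/product/blue-mug/", "/shop/?orderby=price&add-to-cart=5"]:
    print(url, is_disallowed(url))
```

The first two URLs are blocked and the product page is not. The last URL is not blocked either: because each pattern contains “?”, it only matches when the cart parameter comes first in the query string. A pattern such as “/*add-to-cart=” would also match the “&add-to-cart=” case.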

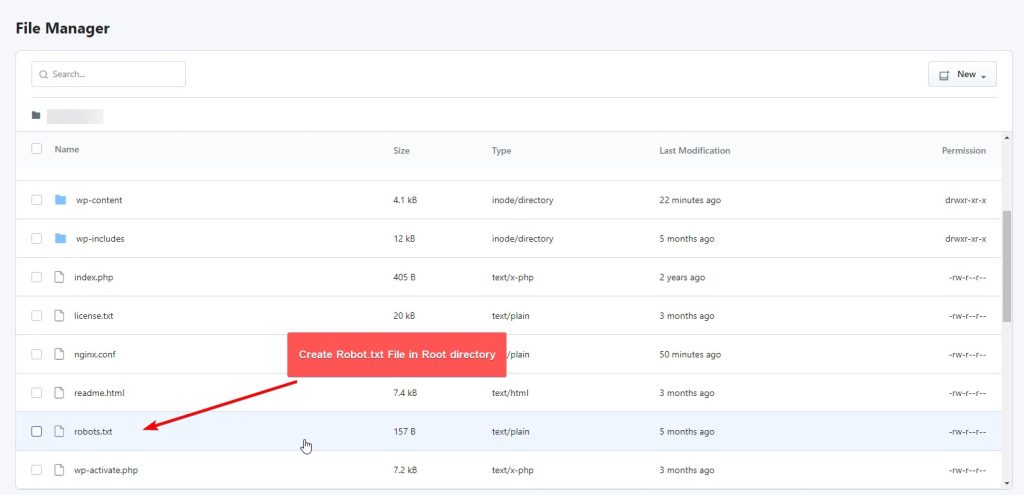

Creating robots.txt in the WordPress Root Directory

If you are using WordPress, you can create a robots.txt file in the root directory of your website. Create a new file called “robots.txt” and add the “Disallow” directives described above. When no physical file exists, WordPress serves a virtual robots.txt; a physical file in the root directory takes precedence over it. You can also use an SEO plugin such as Yoast SEO, which includes a robots.txt editor.

Testing robots.txt with Google Search Console

After creating your robots.txt file, test it to make sure it works as intended. A robots.txt checker tool can validate the file’s content and flag any errors.

In Google Search Console, open the robots.txt report (under “Settings”) to see which robots.txt files Google found for your site, when each was last crawled, and any errors or warnings. This report replaces the older “robots.txt Tester” tool. To check whether a specific URL is blocked, enter it in the URL Inspection tool.
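Besides Search Console, a quick script can confirm that the robots.txt your server actually serves contains the rules you added. The minimal Python sketch below fetches the file with the standard library and lists any expected Disallow values that are missing. `missing_rules` and `fetch_robots` are hypothetical helpers, the expected list mirrors this article’s rules written with a leading “/”, and the check is deliberately simple: it does not verify which User-agent group each rule belongs to.

```python
from urllib.request import urlopen

# Disallow values we expect to find (the WooCommerce rules from this article).
EXPECTED = ["/*?add-to-cart=*", "/*?remove_item=*", "/*?removed_item=*", "/*?undo_item=*"]


def fetch_robots(site):
    """Download <site>/robots.txt as text; site includes the scheme, e.g. https://..."""
    with urlopen(site.rstrip("/") + "/robots.txt", timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def missing_rules(robots_txt, expected=EXPECTED):
    """Return the expected Disallow values that do not appear anywhere in the file."""
    found = set()
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow":
            found.add(value.strip())
    return [rule for rule in expected if rule not in found]


# Offline example with an incomplete file.
sample = """# Preventing WooCommerce dynamic URLs
User-agent: *
Disallow: /*?add-to-cart=*
Disallow: /*?remove_item=*
"""
print(missing_rules(sample))  # ['/*?removed_item=*', '/*?undo_item=*']
```

Against a live site you would call `missing_rules(fetch_robots("https://example.com"))`, replacing the placeholder domain with your own; an empty list means every expected rule is present.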


Conclusion

Overall, preventing dynamic URLs from being crawled is an important aspect of SEO for any WooCommerce website. The methods outlined in this article help your website get crawled efficiently and reduce duplicate content issues.